CIC’s work with the Health Faculty has hit a new milestone. As nurses work in teams in an experimental version of the simulation ward, they now generate data streams from sensors that detect their position, patient treatment actions and arousal levels. A student observer watching the team also uses an iPad to log more complex actions that cannot be sensed automatically.

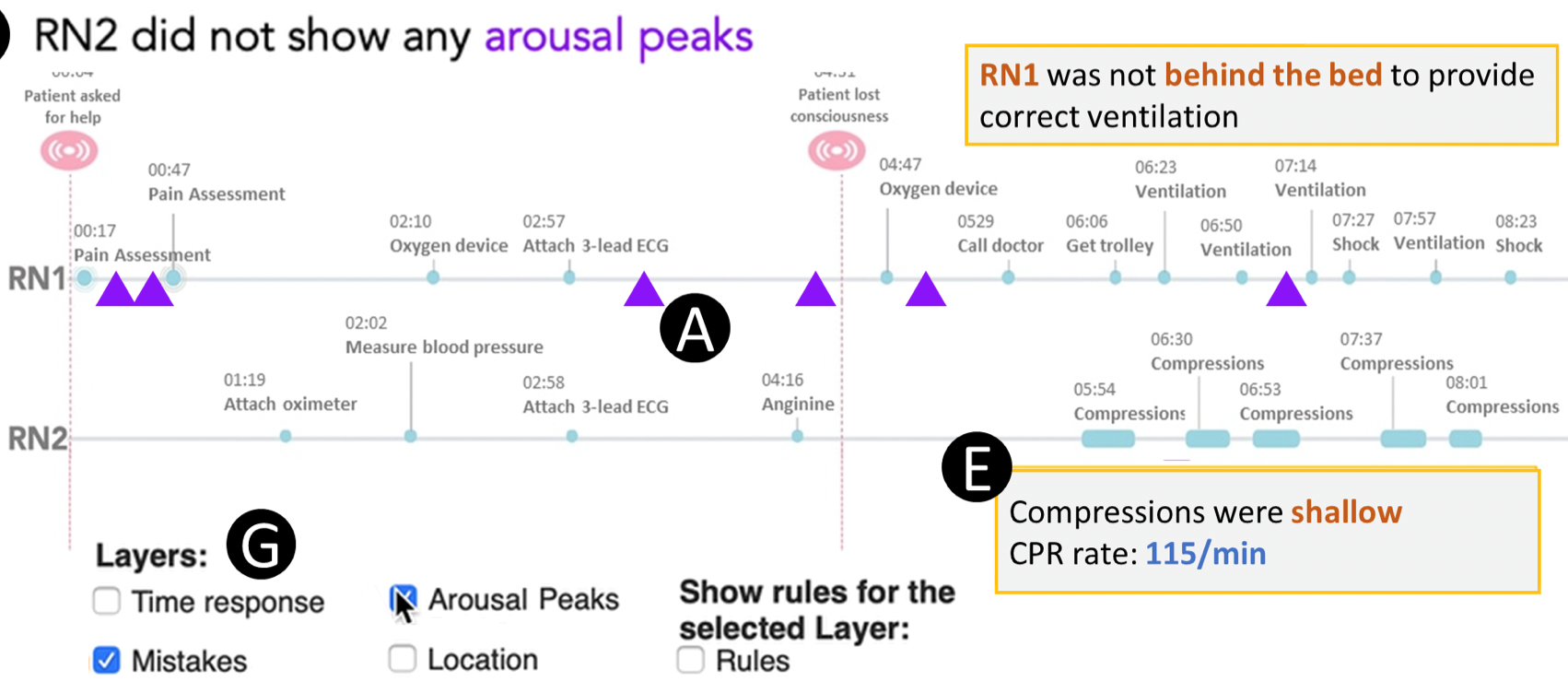

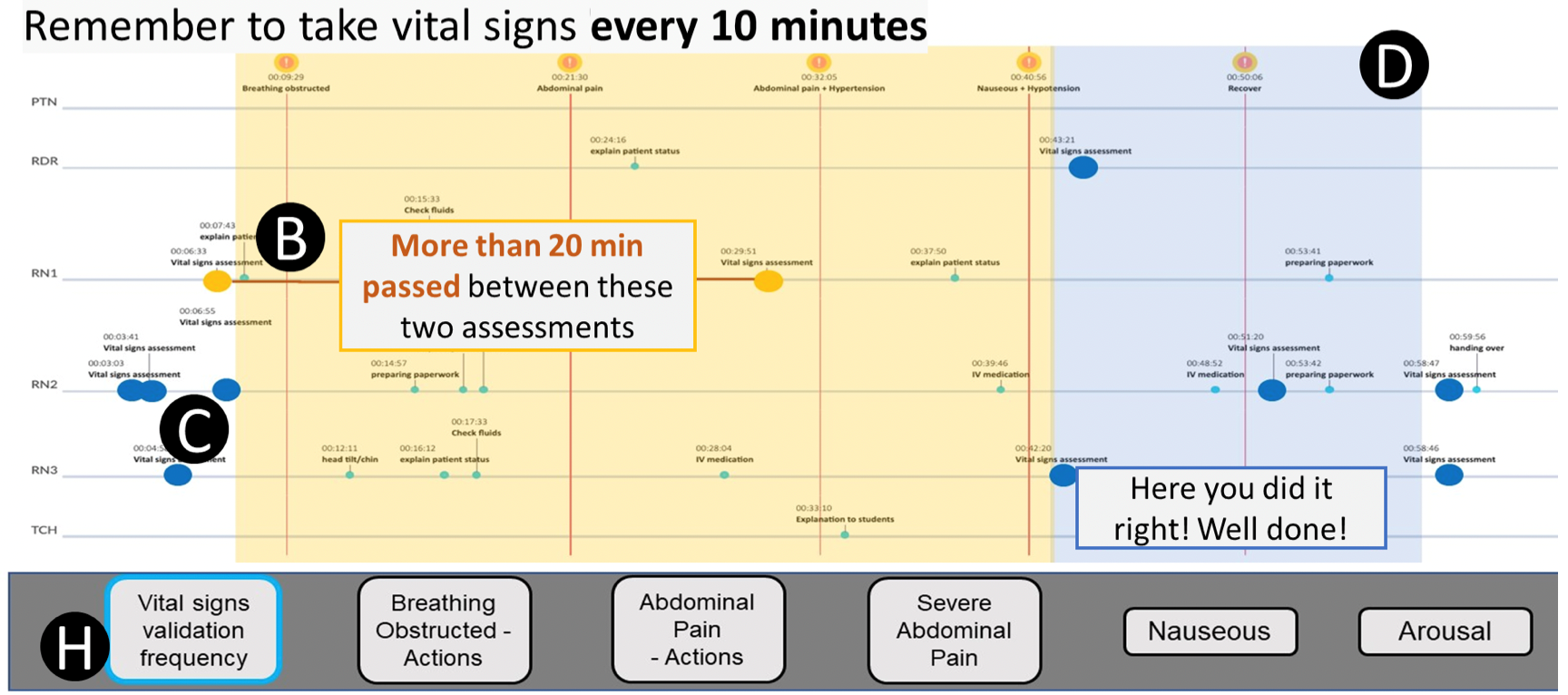

This generates a rich dataset of the team’s performance. In an earlier story we reported the validation of a first prototype that generated a timeline showing “who did what, when”. Over the last year, significant effort has gone into working out how to add further kinds of information without overloading the display, leading to a new way of presenting different kinds of feedback in different “layers”. Two examples are shown below.

Prototypes of the layered storytelling interfaces. Top: Prototype 1 – layers mistakes and arousal peaks for a team of two nurses. Bottom: Prototype 2 – layers vital signs validation frequency for a team of five nurses.

These have been evaluated with both nursing academics and students, with very encouraging feedback. Although these designs were prototypes built to test the concept, they include no functionality that could not be programmed, so the next step is to implement them in code.

Many congratulations to Vanessa Echeverria and Gloria Fernandez-Nieto, whose PhD research will appear in a paper with supervisors Roberto Martinez-Maldonado and Simon Buckingham Shum. The paper has just been accepted to CHI 2020, the premier international conference in computer-human interaction!

Martinez-Maldonado, R., Echeverria, V., Fernandez-Nieto, G. & Buckingham Shum, S. (2020). From Data to Insights: A Layered Storytelling Approach for Multimodal Learning Analytics. Proc. ACM CHI 2020: Human Factors in Computing Systems (April 25–30, 2020, Honolulu, HI, USA), Paper 21, pp.1-15. https://doi.org/10.1145/3313831.3376148 [Open Access Eprint]
