The irony we need to iron out

One of the ironies in universities the world over is that academics secure external funding to analyse diverse organisations with their dilemmas, but more rarely tackle their own institutional challenges. Consider computer science, statistics, management, economics: often they are focused on analysing data from other organisations.

This is typically due to a mix of funding incentives, professional identity and career development, and organisational politics (it can be hard to criticise one’s own colleagues). The culture gap between academics and business units compounds the problem. Researchers typically have state-of-the-art infrastructure at their disposal for exploring new analysis techniques, or the horsepower to harness established infrastructure, while the same university’s business units may be tackling very similar problems with dated infrastructure and/or limited staff resources, unaware of new approaches. Conversely, academics may be unaware of the state-of-the-art institutional research being undertaken by central units within their own or similar institutions.

We need to be creative enough to bridge these traditional gulfs, and ‘distribute the future more evenly’.

Collective intelligence initiatives

It is common in many computational fields to set Data Challenges around common datasets or tasks (TREC text information retrieval and its multimedia equivalent; the Robotics World Cup; etc.). This provides both methodological rigour for comparing approaches and the edge of competing against the world’s best. In commerce we see web-based initiatives such as Kaggle, in which an organisation releases a common dataset with a well-specified task and success criteria, monetary rewards, and a league table of competitors.

At least two other approaches are worth noting. In a Taskforce all parties coordinate rather than compete to solve an urgent problem (e.g. find an Ebola vaccine) or a grand challenge (e.g. sequence the human genome), and are resourced and rewarded for doing so. Data are gathered and shared according to agreed protocols in order to maximise the ease of pooling results from globally distributed research experiments.

A third organisational form is the Networked Improvement Community (NIC), an approach first proposed by computing pioneer Doug Engelbart in his work on “Collective IQ”, and now grounded in what has come to be known as Improvement Science, which has established a track record in health and is now growing in education. In a NIC, members commit to work on a longstanding challenge in a systemic way. In contrast to a Taskforce, which is typically tackling a solvable problem (Ebola is contained or cured; the human genome is sequenced), NICs often tackle “wicked” socio-technical problems, with no complete solution likely (e.g. reducing college dropouts in remedial mathematics; improvement in surgical theatre infection rates; more effective urban planning).

Joining the dots

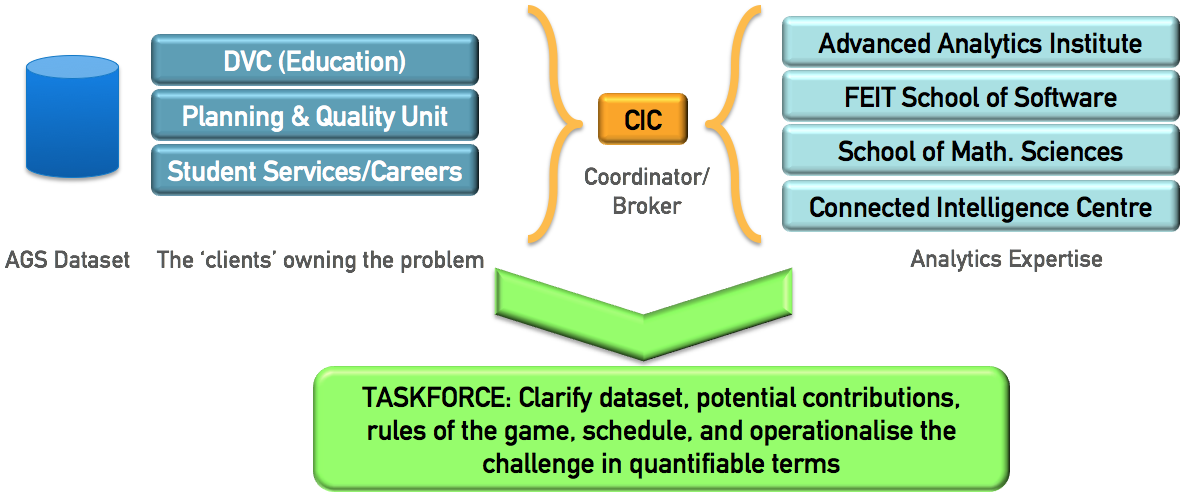

CIC has been operating not just as a source of analytics capability, but as a broker between analytics expertise and the problem owners (in this case business units with data from the Australian Graduate Survey).

Initially framed as a data challenge, the exercise evolved into more of a taskforce, since the different groups brought very different but complementary lenses: a range of textual, statistical and data mining approaches.

An exercise such as this is both technical and social, bridging several different cultures (student support services, business intelligence, and theoretical and applied academic disciplines) with different expertise, ways of working, and performance indicators.

The results and process are under review within UTS, and we welcome insights from others running similar exercises.