Learning Analytics for Learner Profile Development

Chairs: Vitomir Kovanovic (UniSA) & Simon Buckingham Shum (UTS)

This session was convened at ALASI22 on 8 Dec. The agenda and slides are below, followed by the session description from the program. It was attended by researchers and practitioners from both the higher education and K-12 school sectors, and it sparked some lively discussion that we hope will be the springboard for future events. The lightning talks were very brief, so we’ve added links for those who want to go deeper.

4.00pm Welcome!

4.05pm Lightning talks (links to slides):

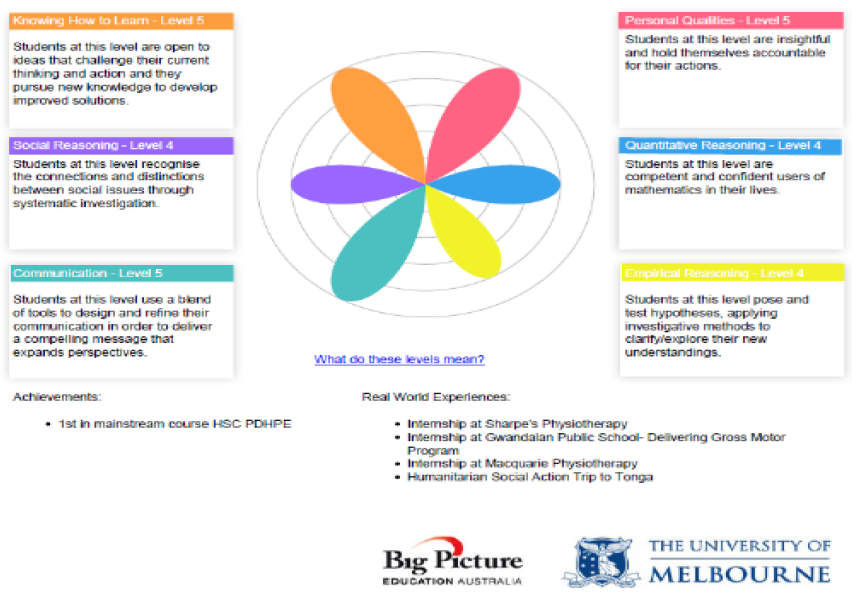

Amy Gilchrist & Christine Huynh (Liverpool Boys High School): Learner Profiles at LBHS

> Learn more: LBHS Principal Mike Saxon on using the ReView platform to track general capabilities [video] and Darrall Thompson (UTS) on “Marks Should Not Be the Focus of Assessment” [paper]

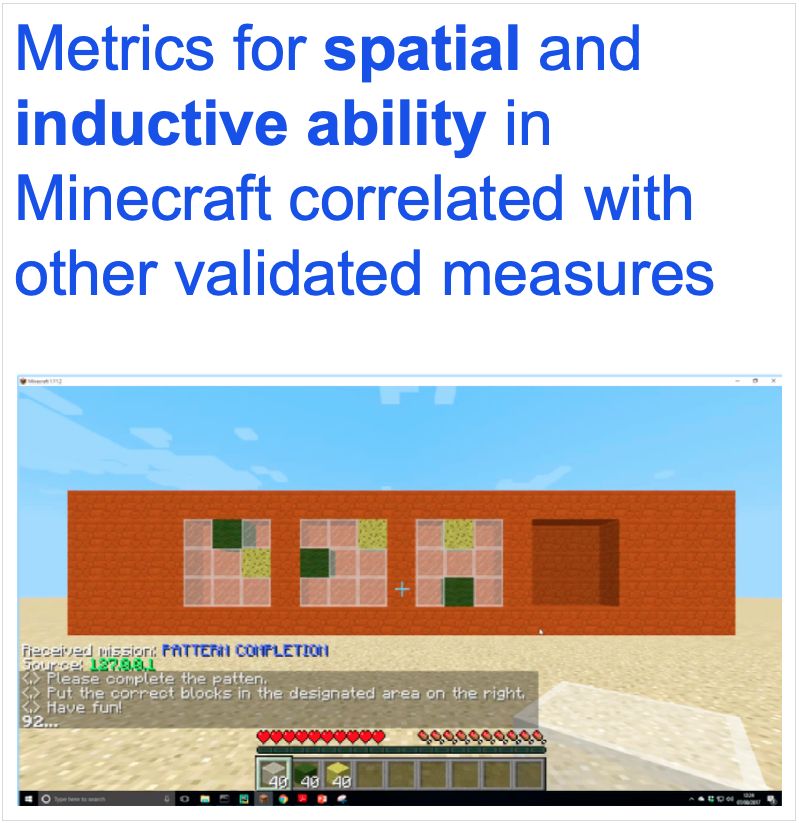

Sandra Milligan (Melbourne U): Learner Profiles and the New Metrics project

> Learn more: “How to measure learning success” [video], “Beyond ATAR” [report] and “Assessment in the Age of AI” [paper]

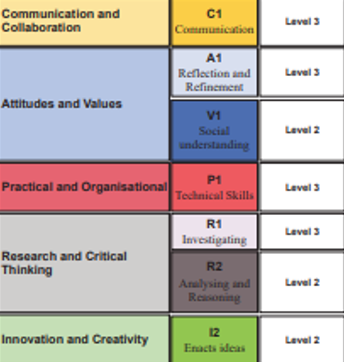

Vitomir Kovanovic (U South Australia): Using digital data to support teacher decision-making

> Learn more: Empowering Learners in the Age of AI 2022: “Learner Profiles” [panel] and keynote [video], and a GRAILE Learner Profiles panel [video]

Simon Buckingham Shum (UTS): Learning Analytics-powered Learner Profiles?

> Learn more: “Learning Analytics for 21st Century Competencies” [video], JLA special issues [2016/2020] and UK Parliamentary briefing [video].

5pm Open discussion: questions, connections, challenges…?

5.30pm Close

Session Description

Over the last few years, the term Learner Profile has been increasingly used within education to describe different approaches for collecting important information about student learning. Demographics, prior knowledge and motivation, as well as knowledge, skills and competencies, have all been used to create learner profiles, providing an important source of information for supporting teaching and learning in various domains. At the core of such initiatives is the idea that educational experiences can be personalised by knowing enough about students and their learning processes and outcomes. With a growing number of government initiatives looking to use learner profiles to provide a fuller view of student learning, there is an increasing need to understand how such approaches can be used effectively to drive student success. The diverse approaches to creating learner profiles raise a wide range of questions about their development. Which constructs should be included in learner profiles? How should those constructs be operationalised and measured? How valid are such operationalisations? How scalable and time-consuming is the collection of learner profile data? This session gathers experts working in the learner profile area to share examples and explore these key questions.

BACKGROUND

Within the educational sector, there has been growing momentum for assessing the growth and progress of learners’ abilities and knowledge over time. The push to report on learning progress rather than static assessment scores is mirrored in recent government initiatives and reports, such as Gonski et al. (2018) and Masters (2014). These reports emphasise the need for a development-oriented rather than an assessment-oriented mindset, and the need to assess learning progressions rather than performance at specific points in time. Such data are typically collected in learner profiles that contain information about students’ learning processes, skill and competency development, and learning progression. Importantly, these reports also highlight the need to use data-driven approaches to improve student learning. Learning analytics (LA) research includes a substantial body of work on profiling students and developing their profiles. Initially, the focus was on identifying students at risk of attrition or poor academic performance, and on modelling study strategies and pathways. LA studies tend to use learning traces generated by educational technologies (such as learning management systems) to profile learners based on their strategy adoption, together with motivational and demographic information (Zamecnik et al., 2022). The trace data collected from these environments are longitudinal, provide fine-grained information about students’ learning, and represent an important source for learner profile development.

OBJECTIVES OF THE SESSION

Participants can expect to engage critically in discussion around the development of learner profiles in secondary school and tertiary settings. The session’s objective is to discuss ways in which learning analytics could support the development and adoption of learner profiles at scale. We will discuss questions such as: What should be included in the learner profile? How should profiles be populated? What is the validity behind constructs in the profile? What should be the purpose of learner profiles? How scalable are current profiling approaches? How should profiles be integrated into the curriculum?

DESIGN OF THE SESSION

The session will run for 90 minutes in two parts. The first part (60 min) consists of four 10-minute presentations, each followed by a short 5-minute table discussion. In the second part (30 min), we will hold an open panel discussion with the presenters, moderated by the session organisers. The audience will have the opportunity to ask questions as we discuss the key points from each presentation. The presenters will represent different perspectives on learner profile development from different educational sectors.

INTENDED AUDIENCE

The intended audience consists of educational practitioners and researchers, broadly from the learning analytics, educational technology, educational policy and assessment domains. The audience will help shape the conversation around learner profiles and identify challenges and opportunities for future research in this area.

RESOURCES REQUIRED

No specific resources are needed to run the session.

ORGANISER BIOS

Vitomir Kovanovic is a Senior Lecturer at the University of South Australia, investigating the use of learning analytics for supporting teacher decision-making. He is also Co-editor-in-Chief of the Journal of Learning Analytics (JLA) and was the Program Co-chair of the LAK20 conference.

Simon Buckingham Shum is Professor of Learning Informatics at the University of Technology Sydney, where he directs the Connected Intelligence Centre, inventing and evaluating tools to provide personalised, data-driven feedback to students. His research focuses on the human dimensions of designing and embedding such tools into practice.

REFERENCES

Gonski, D., Arcus, T., Boston, K., Gould, V., Johnson, W., O’Brien, L., Perry, L.-A., & Roberts, M. (2018). Through Growth to Achievement: Review to Achieve Educational Excellence in Australian Schools. Department of Education and Training, Australian Government.

Masters, G. N. (2014). Towards a growth mindset in assessment. Practically Primary, 19(2), 4–7.

Zamecnik, A., Kovanović, V., Joksimović, S., & Liu, L. (2022). Exploring non-traditional learner motivations and characteristics in online learning: A learner profile study. Computers and Education: Artificial Intelligence, 3, 100051. https://doi.org/10.1016/j.caeai.2022.100051